Kopano-server crashes

-

Good Morning everyone,

Now, after 17 hours, it looks good: kopano-server has not crashed since then.

So I think we found the problem: kopano-search is causing the memory flood in kopano-server. But why? As Coffee_is_life wrote, on his setup everything worked fine once the index process had completed. Perhaps it is the same with my setup. But my indexing has been running for two weeks now. :-(

Do you have any ideas what to tweak in this case?

-

After 4 days without kopano-search, kopano-server has not crashed anymore.

But the memory usage still keeps rising, just much more slowly…

Any thoughts?

-

I also (still?) have this problem. Currently using 8.6.81.171-0+25.1 on Ubuntu 18.04 x64.

[Fri Sep 28 19:51:29 2018] Out of memory: Kill process 1240 (kopano-server) score 902 or sacrifice child

[Fri Sep 28 19:51:29 2018] Killed process 1240 (kopano-server) total-vm:26514968kB, anon-rss:10946196kB, file-rss:0kB, shmem-rss:0kB

By the time it crashed it had been running for only 11 hours or so, after having been restarted due to a kopano-server crash the day before. The server should have enough memory for normal use (12GB), and an additional 16GB of swap is available. Especially with the swap, I really see no reason at all for it to run out of memory.
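For reference, the sizes in the OOM-killer line above can be pulled out and converted to GiB with a few lines of Python. This is only a sketch: the function names are made up for illustration, and the regular expressions assume the exact "Killed process" line format quoted in this post.

```python
import re

# Assumed format: "Killed process <pid> (<name>) key:NkB, key:NkB, ..."
KILLED_RE = re.compile(r"Killed process (\d+) \(([^)]+)\) (.*)")
FIELD_RE = re.compile(r"([\w-]+):(\d+)kB")


def parse_oom_kill(line):
    """Return pid, process name and the kB counters from one OOM-killer line."""
    match = KILLED_RE.search(line)
    if match is None:
        return None
    pid, name, rest = match.groups()
    counters = {key: int(value) for key, value in FIELD_RE.findall(rest)}
    return {"pid": int(pid), "name": name, "kB": counters}


def kb_to_gib(kb):
    """Convert kB (as printed by the kernel, 1024-byte units) to GiB."""
    return kb / (1024 * 1024)


line = (
    "[Fri Sep 28 19:51:29 2018] Killed process 1240 (kopano-server) "
    "total-vm:26514968kB, anon-rss:10946196kB, file-rss:0kB, shmem-rss:0kB"
)
info = parse_oom_kill(line)
for key, kb in info["kB"].items():
    print(f"{key}: {kb_to_gib(kb):.2f} GiB")
```

Note that total-vm is the virtual address space of the process, while anon-rss is the part actually resident in RAM when it was killed.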

I will now try updating to 8.6.81.435-0+75.1 in the hope that this out-of-memory bug has been fixed in the meantime.

In addition, I’ve also cleared the /var/lib/kopano/search/ directory and restarted the indexing process. Maybe the index is corrupted?

Update: Has not changed/fixed anything. Server still crashing about once a day. Redoing the index didn’t help, neither did disabling the indexing of attachments.

I’ve added the following to the kopano-server.service file for a temporary fix:

[add in the service section]

Restart=on-failure

RestartSec=10s

[add in the unit section]

StartLimitIntervalSec=300s

StartLimitBurst=2

-

Thank you for your message.

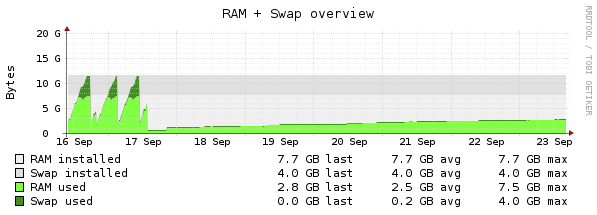

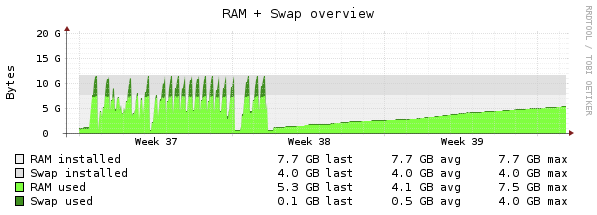

Here is a graph of the memory consumption:

- In the first half you see the memory rising very quickly until the server process crashed. It restarted automatically after I modified the kopano-server.service file.

- In the second half (~ week 38) I didn’t start the kopano-search process. The memory is still rising but much slower.

So I think it is not a problem with the search process. It seems that the search process simply speeds up the rising of the memory usage. If I had more users or much more interaction with the server process, perhaps the memory would be rising faster again.

So it looks like a problem in the kopano-server.
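If someone wants to collect comparable numbers without a monitoring system, the resident memory of a process can be sampled from /proc on Linux. The following is a minimal sketch, not the tool used for the graph above; the function names and the sampling interval are assumptions chosen for illustration.

```python
import re
import time


def parse_vmrss_kb(status_text):
    """Extract the VmRSS value (in kB) from the text of /proc/<pid>/status."""
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB", status_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def read_rss_kb(pid):
    """Read the current resident set size of a process (Linux only)."""
    with open(f"/proc/{pid}/status") as handle:
        return parse_vmrss_kb(handle.read())


def sample_rss(pid, interval_s=60, count=5):
    """Collect (timestamp, rss_kb) pairs; interval and count are arbitrary."""
    samples = []
    for _ in range(count):
        samples.append((time.time(), read_rss_kb(pid)))
        time.sleep(interval_s)
    return samples
```

Calling sample_rss() with the PID of kopano-server and writing the pairs to a file would give a simple time series that can be compared before and after an update.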

Just for interest, what hardware do you use, Gerald? Is it virtualised hardware? If yes which kind (VMware, HyperV,…)?

Regards,

Alex

-

@sniks6 said in Kopano-server crashes:

Just for interest, what hardware do you use, Gerald? Is it virtualised hardware? If yes which kind (VMware, HyperV,…)?

I’m using VMware ESXi (Free Edition), currently version 6.5.0 U1 (Build 7967591). The VM has the open-vm-tools package installed.

I don’t think the behavior would change on physical hardware though.

Update: crashed again:

[Tue Oct 2 12:01:22 2018] Out of memory: Kill process 2038 (kopano-server) score 848 or sacrifice child

but my modifications to automatically restart the process worked, so I guess nobody even noticed.

Just 6 hours later, memory usage for the kopano-server process was again up to 5.6GB; after restarting kopano-server, memory usage is down to 350MB. This thing is leaking like crazy! At this rate even throwing 32GB at it would not really help for long.
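To put a rough number on "not for long": the sketch below assumes the process starts at about 350MB and grows linearly to 5.6GB within 6 hours. Both the linear growth and the starting value are simplifying assumptions taken from the figures in this post, and the function names are hypothetical.

```python
def leak_rate_gb_per_hour(start_gb, end_gb, hours):
    """Average growth rate, assuming memory rises linearly over the period."""
    return (end_gb - start_gb) / hours


def hours_until_full(start_gb, capacity_gb, rate_gb_per_hour):
    """Hours until the process would reach capacity at a constant rate."""
    return (capacity_gb - start_gb) / rate_gb_per_hour


# Figures from the post: about 0.35 GB after a restart, 5.6 GB after 6 hours.
rate = leak_rate_gb_per_hour(0.35, 5.6, 6)
for capacity_gb in (12, 32):
    hours = hours_until_full(0.35, capacity_gb, rate)
    print(f"{capacity_gb} GB reached after about {hours:.0f} hours")
```

Under these assumptions the growth is a bit under 1GB per hour, so even 32GB would be used up in roughly a day and a half.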

Update2: regarding kopano on physical hardware

I just checked the kopano I’m using for my personal email, which is on physical hardware (Atom-based quad-core NUC with 8GB RAM), and here kopano-server is hogging about 3GB of memory after running for 48 hours under low load (about 3 users with 3 devices each). Also an Ubuntu 18.04 system, by the way.

-

@Gerald: Thanks for the info. :-)

Isn’t there anybody who could give us a hint where we could tweak something or check what might be causing this memory problem?

-

@sniks6 said in Kopano-server crashes:

Isn’t there anybody who could give us a hint, where we could tweak or check what could cause this memory problem?

This does not look like something that can be tweaked; it rather looks like a memory leak somewhere in the master branch. We want to branch off a release soon, so we will eventually need to look into it.

I’ve created https://jira.kopano.io/browse/KC-1299 to track progress.

-

Thanks for your message, Felix.

If it is helpful, I could provide you with more info from my system. I am happy to help.

-

We plugged a first memory leak over the weekend; the fix should be included in the next update of the nightly packages: https://stash.kopano.io/projects/KC/repos/kopanocore/commits/57dd3acd8e04695137cd2f86bc9f173cbf242aa1

-

We fixed an important memory leak in master only two weeks ago… Looking at the timing of the comments above, it seems it could well have been the cause of some of these issues?

commit 30b9996155fcad78d41cf79aa399867252b75e96

Merge: 4d16ca5c7 57dd3acd8

Author: Joost Hopmans j.hopmans@kopano.com

Date: Fri Oct 5 16:16:32 2018 +0200

Merge pull request #2264 in KC/kopanocore from ~JENGELHARDT/kc:leak to master

* commit '57dd3acd8e04695137cd2f86bc9f173cbf242aa1':

libserver: plug a memory leak in loadObject

Yeah, when doing initial syncing with search, that would probably uncover a memleak much faster than under average load…

-

Hi Mark,

thank you for your answer. Right now I am on holiday, but when I am back I will try the current nightly build and report back.

Regards, Alex

-

Hi there,

I just came back from my holiday.

I wanted to install the latest nightly build “core-8.7.80.12_0+11-Debian_9.0-amd64”. Unfortunately, libkcpyplug0 is missing, so I could not run the spooler or dagent.

Could you please include libkcpyplug0 again?

Thanks.

-

That was fixed on Oct 24.

» tar -tf core-8.7.80.12_0+11-Debian_9.0-amd64.tar.gz | grep pypl

core-8.7.80.12_0+11-Debian_9.0-amd64/./libkcpyplug0-dbgsym_8.7.80.12-0+11.1_amd64.deb

core-8.7.80.12_0+11-Debian_9.0-amd64/./libkcpyplug0_8.7.80.12-0+11.1_amd64.deb