Problem with moved Attachments from Local Store to s3 Storage

-

I have a short question. Some weeks ago I moved the attachments of a Kopano server from local storage to S3 storage. First I copied the exact structure of the attachment folder to the S3 storage, which did not work well; as I also read somewhere here in the forum, the structure on S3 storage is flat, without subfolders. So I uploaded all files to the S3 storage again with a little script that removes the folder structure. This worked for most users. Then I noticed a problem with some gzip-packed attachments; after I gunzipped the files and re-uploaded them, these worked as well.

But I still have one user with old mails whose attachments he can't open. Is it possible to enable more information in the log files?

/var/log/kopano/server.log gives me only the following:

    Fri Dec 6 09:13:32 2019: [error ] S3: Amazon return status ErrorNoSuchKey, error: <unknown>, resource: "<none>"
    Fri Dec 6 09:13:32 2019: [error ] S3: Amazon error details: BucketName: kopano_attachments_xxx
    Fri Dec 6 09:13:32 2019: [error ] S3: Amazon error details: RequestId: tx00000000000000015018b-005dea0dac-1234test1234
    Fri Dec 6 09:13:32 2019: [error ] S3: Amazon error details: HostId: 1234test1234
    Fri Dec 6 09:13:32 2019: [error ] SerializeObject failed with error code 0x8000000e for object 1127442

Here is my proof of concept script:

    #!/bin/bash
    # Proof of concept: flatten the local attachment tree into one S3 prefix,
    # decompressing .gz attachments (in place) before the upload.
    SOURCE=/data/kopano/attachments
    DEST=s3://kopano_attachments
    S3PARAMS="--skip-existing --no-check-md5"   # defined, but not passed to s3cmd below

    process_file() {
        local source_file=$1
        local dest_file
        echo "process file '${source_file}'"
        if [[ $source_file == *.gz ]]; then
            echo "gzip file detected, gunzip."
            gunzip "$source_file"
            source_file=${source_file%.gz}   # strip only the trailing .gz
        fi
        dest_file=$(basename "$source_file")
        echo "copy ${source_file} to ${DEST}/attachments/${dest_file}"
        # Remove a previously uploaded compressed copy, if there is one.
        if s3cmd ls "${DEST}/attachments/${dest_file}.gz" | grep -q '\.gz$'; then
            echo "remove gzip file on s3 storage"
            s3cmd rm "${DEST}/attachments/${dest_file}.gz" >> /tmp/upload.log
        fi
        s3cmd -v put "$source_file" "${DEST}/attachments/${dest_file}" >> /tmp/upload.log
    }

    # find already recurses, so a single pass visits every file exactly once.
    find "$SOURCE" -type f -print0 | while IFS= read -r -d '' file; do
        process_file "$file"
    done

The script is not optimized, so some things could be written more simply.
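To narrow down which attachments never made it into the bucket, one option is to compare the key names the upload script above would generate with what is actually listed in S3. This is only a sketch: `flat_key` and `missing_keys` are hypothetical helper names, `BUCKET` is a placeholder, and it assumes that the S3 key is the local file name without its directory and without a `.gz` suffix, as in the script.

```shell
#!/bin/bash
# flat_key PATH
# Print the name the upload script would use as S3 key:
# directory part removed, trailing .gz removed.
flat_key() {
    local name
    name=$(basename "$1")
    printf '%s\n' "${name%.gz}"
}

# missing_keys LOCAL_LIST REMOTE_LIST
# Print every line of LOCAL_LIST that has no identical line in REMOTE_LIST.
missing_keys() {
    grep -Fxv -f "$2" "$1"
}

# Possible usage (paths and bucket are assumptions):
#   find /data/kopano/attachments -type f |
#       while IFS= read -r f; do flat_key "$f"; done | sort -u > /tmp/local.txt
#   s3cmd ls s3://BUCKET/attachments/ | awk '{ print $4 }' |
#       sed 's#.*/##' | sort -u > /tmp/remote.txt
#   missing_keys /tmp/local.txt /tmp/remote.txt
```

Anything printed by `missing_keys` exists locally but was not found under the same name in the listing, which would be consistent with the `ErrorNoSuchKey` message in the log.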

-

Hi @Beleggrodion,

we do not really support moving between attachment backends. If you have a subscription, I would recommend reaching out to our support and professional services so that they can have a look at your system.

-

You can enable `log_level=6` in `kopano-server.cfg` to get more S3 messages.

-

Hi buddy, which logs are you looking at?

Regards,

Jimi Smith

-

Looking at this code:

https://github.com/Kopano-dev/kopano-core/blob/master/provider/libserver/ECS3Attachment.cpp

do I understand correctly that the S3 storage adapter does not support gzip-compressed attachments at all? If not, why not?

-

The answer is quite boring: No one asked for it yet.

-

@jengelh

That's quite crazy to hear, as people are obviously moving from file storage to S3 storage and have to unpack all their gzips. Considering the usual compression ratio of the attachments, this probably increases their S3 bills tenfold… In my case from 1TB to ~10TB.

-

S3 is terrible. More specifically,

- AWS-S3 is terrible: The more remote you go, the higher the access latency, and this adds up quickly. When I last dealt with an AWS case in '18, I observed access latencies on the order of 100 ms per attachment.

- I see similar latency when using e.g. sshfs with a private dedicated server, so the AWS observation is not a far-fetched phantasm. But sshfs has a cache, so on second access it is just 30 ms (of which 22 are for the Internet line itself). To the best of my recollection, AWS exhibited the big latency on every access, which is why this was so annoying for the customers (of course, someone sent 20 attachments in one mail, so that’s that).

- S3-the-API is terrible: it is lacking the atomic rename operation, so it cannot support concurrent access by multiple kopano-servers and you cannot get the benefits of that (cross-server deduplication). Many other distributed filesystems honor it, based on what their documentation suggests, some of which can be used with just the files_v2 driver.

- Deduplication (even within just one server) is a bigger win in the long run than compression alone. Ideally both are combined, perhaps in a future files_v3.
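To see how per-attachment latency adds up when objects are fetched one after another, as described in the list above, a rough measurement could look like the following. It is a sketch only: `now_ms`, `time_ms` and `mean` are hypothetical helpers, GNU `date` (for `%N`) is assumed, and `BUCKET` and the key names are placeholders. Each `s3cmd` call also pays for its own process start-up, so the numbers are an upper bound and not what kopano-server itself would see.

```shell
#!/bin/bash
# now_ms: current time in milliseconds (needs GNU date for %N).
now_ms() {
    echo $(( $(date +%s%N) / 1000000 ))
}

# time_ms CMD [ARGS...]
# Run the command with its output discarded and print the elapsed
# wall-clock time in milliseconds.
time_ms() {
    local start end
    start=$(now_ms)
    "$@" > /dev/null 2>&1
    end=$(now_ms)
    echo $(( end - start ))
}

# mean: read one integer per line on stdin and print the truncated average.
mean() {
    awk '{ sum += $1 } END { if (NR > 0) printf "%d\n", sum / NR }'
}

# Possible usage, fetching a few objects sequentially:
#   for key in KEY1 KEY2 KEY3; do
#       time_ms s3cmd get --force "s3://BUCKET/attachments/$key" /tmp/att.bin
#   done | mean
```

Multiplying the average by the number of attachments in a mail gives a feel for the delay a user would notice, for example 20 attachments at 100 ms each come to about 2 seconds.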

-

In my case the Kopano server itself is on AWS, and latency is acceptable for our customers. It is only a single server, so concurrent access is not an issue. Deduplication as in files_v2 can be easily added to S3 backend as well.

-

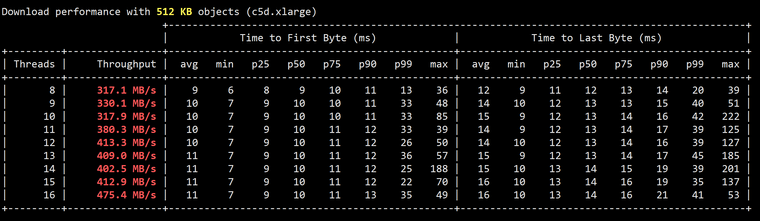

@jengelh - S3 latencies and throughput from our Kopano server instance attached, tested with

https://github.com/dvassallo/s3-benchmark

-

@NeGrusti said in Problem with moved Attachments from Local Store to s3 Storage:

> Deduplication as in files_v2 can be easily added to S3 backend as well.

In a way that is race-free with respect to, and does not DoS, other kopano-servers in a multiserver setup? Try me.

> In my case the Kopano server itself is on AWS

Well ok, that’s not a case of “very remote”, so it will pan out. Though I presume it will not be much different, one should measure at the ECS3Attachment.cpp location rather than with s3-benchmark.

-

Hi @negrusti

Do you have an answer to your question regarding gzip?

I’m trying to move to an S3-compatible storage (Wasabi) as well, and I’m wondering whether I have to unzip all attachments first. How do I do that?
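Going by the earlier posts in this thread (flat layout on S3, no gzip handling in the S3 backend), one possible approach is to decompress into a separate staging directory instead of in place, and upload that directory afterwards. The following is a sketch under those assumptions: `stage_flat` is a hypothetical helper, the paths and `BUCKET` are placeholders, the staging directory must lie outside the source tree, and it needs enough free disk space for the uncompressed copy.

```shell
#!/bin/bash
# stage_flat SRC_DIR STAGE_DIR
# Copy every file below SRC_DIR into the single directory STAGE_DIR.
# Files ending in .gz are decompressed on the way; the originals stay untouched.
# If both NAME and NAME.gz exist, the one processed later overwrites the other.
stage_flat() {
    local src=$1 stage=$2 file name
    mkdir -p "$stage" || return 1
    find "$src" -type f | while IFS= read -r file; do
        name=$(basename "$file")
        case "$name" in
            *.gz)
                gunzip -c "$file" > "$stage/${name%.gz}" ||
                    echo "failed: $file" >&2
                ;;
            *)
                cp "$file" "$stage/$name" || echo "failed: $file" >&2
                ;;
        esac
    done
}

# Possible usage:
#   stage_flat /data/kopano/attachments /data/kopano/attachments-flat
#   s3cmd sync /data/kopano/attachments-flat/ s3://BUCKET/attachments/
```

Because the local store is left as it is, switching back to the files backend remains possible if the migration does not work out.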